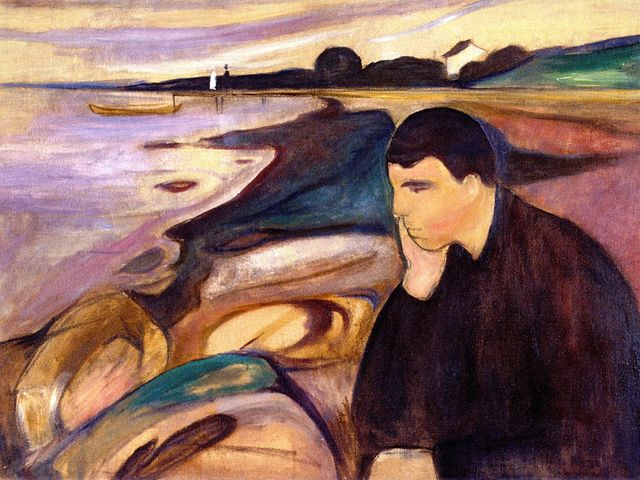

Some melancholy about the value of my work depending on decisions by others beyond my control

For the last few years, while I was employed at the Quantified Uncertainty Research Institute, a focus of my work has been on estimating impact, and on doing so in a more hardcore way, and for more speculative domains, than the Effective Altruism community was previously doing. Alas, the FTX Future Fund, which was using some of our tools, no longer exists. Open Philanthropy was another foundation which might have found value in our work, but they don’t seem to have much excitement or appetite for the “estimate everything” line of work that I was doing. So in plain words, my work seems much less valuable than it could have been [1].

Part of my mistake [2] here was to do work whose value depended on decisions by others beyond my control, and then, given that I was doing that, to not make sure those decisions came back positive.

I have made this mistake before, which is why it stands out to me. When I dropped out of university, it was to design a randomized controlled trial for ESPR, a rationality camp which I hoped was doing some good, but where having some measure of how much good would have helped decide whether to greatly scale it. I designed the randomized trial, but whether to implement it wasn’t my call, and it wasn’t implemented. Pathetically, some students were indeed randomized, but without gathering any pre-post data. Interestingly, ESPR and similar programs, like ATLAS, did scale up, so having tracked some data could have been decision-relevant.

Hopefully I will not make this mistake a third time.

Incidentally, this might be why I viscerally like programming, and particularly programming in C. When I add a feature to my rosenrot browser, the whole rigmarole is under my control. Feedback loops about whether something works or not are fast. There is no master with a veto, no consensus to build, no coalitions to navigate, no politics to play.

I don’t have a clear conclusion yet, beyond keeping this dynamic in mind. I think that giving up on the EA community is too drastic, but there is something there about losing faith, about no longer doing my best while hoping that the EA community will cover me, and instead hunkering down a bit more and adopting some measures to prevent that class of mistakes—like making sure that the research I do is tied to specific decisions, or like putting a high price tag on my work to reduce the likelihood that it is spent on stuff that doesn’t end up working out.

[1]: For what it's worth, my former boss feels that there is still much value in that type of hardcore estimation for individuals, as opposed to large organizations. Also, I did have a decent number of wins; they are just not the focus of this post.

[2]: It's unclear how big this mistake was relative to other factors, like the quality of my work. It could be small.